Description

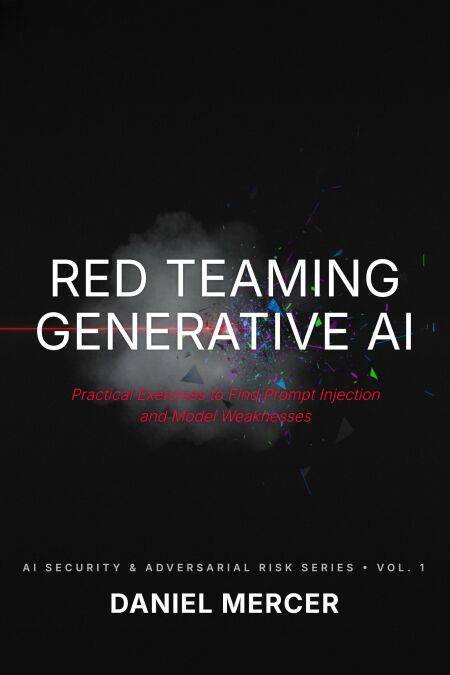

Generative AI systems introduce new attack surfaces that traditional security testing does not cover. Red teaming is essential to uncover how large language models fail under adversarial conditions.

Red Teaming Generative AI is a hands-on guide for security testers and engineers tasked with identifying weaknesses in LLM-based systems. The book focuses on practical testing techniques rather than theory, providing repeatable exercises that mirror real-world attack scenarios.

It explains how to structure red team engagements specifically for generative models and how to translate findings into actionable risk decisions.

Readers will learn how to:

- Design red team scopes for LLM-powered applications

- Execute prompt injection, jailbreak, and data extraction tests

- Evaluate model behavior under adversarial inputs

- Define metrics for model robustness and failure severity

- Document findings using clear, repeatable reporting formats

- Communicate results to engineering and risk stakeholders

This book equips practitioners with the tools needed to proactively test generative AI systems before attackers do.

Specifications

Contributors

- Author(s):

- Publisher:

Content

- Language: English

- Series:

Details

- EAN: 9798233289859

- Publication date: 02-01-26

- Format: Ebook

- Digital protection: /

- Digital format: ePub
